Learning Outcomes

In response to the UNC Strategic Plan, and as part of ECU’s long-standing commitment to designing and operating educational programs grounded in an evidence-based culture, we have published expected student learning outcomes for each of the college’s degree and certificate programs. These outcomes describe the knowledge, skills, and dispositions students will have achieved upon successful completion of the program.

Visit the Learning Outcomes website and select an undergraduate or graduate degree or certificate program to view that program’s expected student learning outcomes. Identifying these outcomes and making them readily available to students, faculty, administrators, and the university’s external stakeholders is an important part of ECU’s commitment to providing the highest-quality educational experience possible for our students.

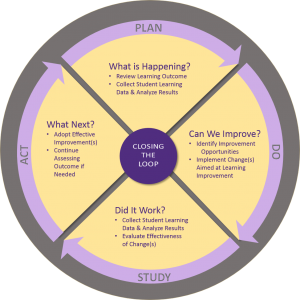

Closing the Loop

“Closing the loop refers to collecting, analyzing, and reporting assessment data as part of a continuous improvement process. You must collect data and close the loop for each learning objective developed for a program. Measure twice, close once [at a minimum in the 5-year cycle]. Actions that demonstrate closing the loop: Collect and analyze data; Disseminate results; Discuss results publicly with stakeholders; Identify and prioritize improvements; Focus on students not faculty; Make the process continuous; Document changes.” – Dr. Karen Tarnoff, ETSU

Data collected must:

- be a direct measure of individual student performance.

- be suitable for making judgments about student performance related to the objective (e.g., the quiz adequately covers the content, or the rubric items capture adequate information).

- reflect a representative sample of students in the program.

- be of a statistically adequate sample size.*

- use substantially the same measurement (i.e., the same rubric or quiz items) as any prior data collection for the objective.

Analysis of data/results must:

- involve a representative group of the faculty.

- be based on a rubric or quiz that provides item-level detail (e.g., results for each quiz question or rubric item) rather than a single overall measure.

- examine item-level performance across the students assessed, not solely each student’s overall performance on a quiz or rubric.

- identify areas of student strength and areas of student weakness revealed by the assessment data.

- follow substantially the same analysis as any prior data analysis for the objective.

Identified improvement actions must:

- be decided by a representative group of the faculty.

- address the area(s) of weakness identified through the analysis of the data collected.

- be specific, measurable, and assignable to one or more individuals for implementation.

- involve a change in pedagogy in one or more specific courses in the program, a change in the program itself (e.g., adding or deleting courses, changing the course sequence, adding or deleting prerequisites), or both.

- affect a critical mass of students in the program (ideally all students in the program).

- be implemented prior to data collection for the next reporting period.

- be measurable for impact within the period required for closing the loop.

Notes:

A system improvement does not count toward loop closure and, depending on the change, may require starting the loop cycle over again. These types of improvements typically involve actions such as:

- modifying a rubric or using a completely new rubric,

- changing an objective or creating a new objective,

- substantially changing a criterion for success,

- substantially changing the way data are collected or analyzed (e.g., moving from a single individual scoring system to a group average scoring system).

Monitoring and re-collecting data without implementing an improvement does not close the loop.

*Typically, an absolute minimum of 50 or 20%, whichever is larger. (Plan to collect at least 75 or 25% to account for the unexpected.) For data groups smaller than 50 (e.g., due to low program enrollment), collect a 100% sample and follow the AOL Guidelines for Small Data Sets (PDF).
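The footnote’s sample-size rule can be expressed as a short calculation. The sketch below is a hypothetical illustration, not an official ECU tool, and rests on several assumptions: the 20% and 25% figures apply to the number of students in the program (`n`), “75/25%” means “75 or 25%, whichever is larger,” percentages are rounded up to whole students, and the planned sample is capped at `n`. The function names are illustrative only.

```python
"""Hypothetical sketch of the footnote's sample-size rule (not an official ECU tool)."""


def ceil_percent(n: int, pct: int) -> int:
    """Return pct% of n, rounded up to a whole student, using exact integer math."""
    return (n * pct + 99) // 100


def minimum_sample(n: int) -> int:
    """Smallest acceptable sample for a program of n students (assumed interpretation)."""
    if n < 50:
        # Small data sets: collect a 100% sample (and follow the small-data-set guidelines).
        return n
    # Otherwise: 50 or 20% of n, whichever is larger.
    return max(50, ceil_percent(n, 20))


def planned_sample(n: int) -> int:
    """Planning target: 75 or 25% of n, whichever is larger, capped at n (assumption)."""
    if n < 50:
        return n
    # Assumption: the target cannot exceed the number of students actually available.
    return min(n, max(75, ceil_percent(n, 25)))


if __name__ == "__main__":
    # Print program size, minimum sample, and planned sample for a few example sizes.
    for n in (30, 60, 100, 300, 400):
        print(n, minimum_sample(n), planned_sample(n))
```

Under these assumptions, a program of 400 students would need at least 80 assessed students and should plan for 100, while a program of 60 would need 50 and, because of the cap, plan to collect all 60. Integer arithmetic is used for the percentages so that floating-point rounding cannot push a result up by one student.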